Piotr Woźniak 1990

| This text was taken from P. A. Wozniak, Optimization of Learning, Master’s Thesis, University of Technology in Poznan, 1990 and adapted for publishing as an independent article on the web (P. A. Wozniak, M. K. Hejwosz, Jul 21, 2010). Please note that this text is pretty outdated and boldly hypothetical. It has been posted here only as a result of unending requests from users of SuperMemo and others. It should not be taken as a reflection of the present position of Dr Wozniak or SuperMemo World on the topics considered. It is placed here on the web for archival purposes only. Minor corrections, esp. of linguistic errors, have been introduced to make the text easier to read. Before reading, take note that the hypothetical concept of stochastic learning can roughly be substituted with a well-defined phenomenon of procedural learning as long as it fits the basic presumption: in stochastic learning, it is the brain that needs to figure out the synaptic connections that need to be reinforced (basically via trial-and-error). Throughout the text stochastic learning is set in opposition to deterministic learning, which roughly corresponds with declarative learning. |

In this chapter I shall start by presenting basic facts and theories concerning the biochemistry and physiology of memory. They should provide the substance for the construction of a molecular model of memory that would combine known neurochemical data with the quantitative properties of memory that are implied by the model of optimal learning used by the SuperMemo method. The chapter is addressed to people with an established interest in neuroscience and, in consequence, requires some knowledge of fundamental biochemistry and physiology of the nervous system. The biological terminology, except for newly introduced concepts, is not included in the glossary at the end of the thesis.

10.1. Location and character of memory

Some physiologists believe that all forms of learning can be explained in terms of classical conditioning; others tend to exclude from that area intellectual activities that are described by the enigmatic term higher thinking. However, for an attentive student of the mechanisms of evolution it is quite obvious that mechanisms involved in human memory and human thinking are based on the same principles as those that lead a rat to a waterspout or a cat to the act of copulation. The natural tendency of scientists to localize the studied phenomena in order to narrow the focus of attention was reflected in early studies of memory by attempts to find that part of the central nervous system that would be responsible for storage and recollection of memories (the pertinence of the nervous system itself has never been disputed). This led Lashley in 1950 to discover that memory does not have any special location in the cerebral cortex. Decorticated animals evinced increasing loss of learning capabilities with increased extent of the lesion, while the specific location of the removed area was less relevant. However, it was also reported that bilateral damage to the medial temporal cortex caused particularly dramatic memory impairment. Similarly, patients suffering from Korsakoff’s syndrome, caused by abuse of alcohol and characterized by damage to the mammillary bodies, revealed striking memory loss. In both cases, affected individuals demonstrate symptoms of anterograde amnesia (inability to form new memories). This suggested a special importance of the damaged structures of the brain in the formation of memory. In the resulting series of studies it was found that injuries to various structures related to the temporal lobes and limbic system cause serious memory deficits. Such formations as the hippocampus, amygdala, temporal stem, mammillary bodies, dorsal thalamic nuclei, cingulate gyri, fornix and others were the most intensively studied. 
The research indicated special importance of the continuity of the Papez circuits of the limbic system which were supposed to conduct reverberating impulsation, proposed by Hebb (1949) as the basis of short-term memory. However, the results of experiments were fraught with contradictions indicating particular importance of a given structure in one form of learning while showing its lesser relevance in another. This can lead to the conclusion that all the studied structures of the brain are important for learning because of their highly associative nature. However, none of them is specially devoted to learning. Other parts of the nervous system are not less capable of storing memories. Because of the high specialization of certain parts of the brain, selected forms of learning may be strongly impaired in case of one sort of damage while remaining intact in another. For example, it was demonstrated that learning to perceive visual stimuli involves the inferior temporal cortex while defensive behavior and induced food aversion require the amygdala for their acquisition. Special circuits may therefore be involved in visual, olfactory, gustatory, auditory, kinesthetic, proprioceptive and other memories. To emphasize the omnipresence of memory, let me note that it was even suggested that plasticity is an inherent feature of all neuronal circuits [Carlson, 1988], although not all sorts of synapses demonstrated improved conductivity after stimulation. It is known that even lower sections of the spinal cord can be used to induce learned responses. Patients with a severed spinal cord can be taught to urinate in response to thigh tapping [Wittig, 1984].

It was noticed very early that two distinct components of memory can be distinguished: short-term and long-term memory. For example, ECS (electroconvulsive shock) is known to delete short-term memories. ECS causes a temporary disturbance in nervous activity and makes a patient unable to recall events that shortly preceded the therapy. Old long-term memories remain unaffected by ECS. This fact suggests that memories are initially sustained by means of nervous activity until they become consolidated in the form of long-term memory. Other factors that seem to erase short-term memory are head injuries, anoxia, hypothermia, anaesthesia and certain drugs like ouabain. All of these factors interfere with activity of neurons. The nervous character of short-term memory is also confirmed by the fact that one can retain memories in one’s mind by constantly thinking about them. Hebb (1949) proposed that short-term memory be considered as circular reverberating nervous impulses. This hypothesis was widely considered as correct. However, it can be shown that all evidence supporting Hebb’s hypothesis can be interpreted without implication of reverberating impulses and an increasing number of researchers are inclined to see short-term memory as a chemical phenomenon [Hubbard, 1975]. First of all, the erasing effect of ECS does not have to be interpreted as caused by the disruption of nervous activity. If we assume that short-term memory has a chemical nature, ECS would add noise to the circuitry by formation of short-term traces in other neurons thus making retrieval of previously formed patterns impossible [Carlson, 1988]. The ability to sustain memory by thinking can also have an interpretation that renders Hebb’s postulate unnecessary. Let us consider the fact that neurons in the inferior temporal cortex retain information about a just perceived visual stimulus (Fuster and Jervey, 1981). 
Some of them respond to particular colors and maintain a high response rate after the stimulus. Electrical stimulus to the region suppresses this activity. The after-stimulus activity may be caused by regular stimulation coming from a reverberating impulse as well as by increased activity of neurons participating in the act of perception. Increased firing might be a form of short-term retention of information in the brain. Cell assemblies or whole neuronal trees or networks might be involved in temporary retention of past excitations in order to allow for such phenomena as trace conditioning (learning requiring association of stimuli separated in time) or rethinking an idea (a mathematician may reissue a command THINK to his problem solving circuitry and the thinking process may be repeated with certain neuronal paths already facilitated). All in all, there must be a form of short-term memory that involves electrical activity of neurons, but it does not have to necessarily involve circular reverberation.

I am personally convinced that what we used to call short-term memory refers to two different phenomena: electrical and chemical short-term memory. As mentioned above, after-stimulus firing of neurons and the possibility of retaining a fact in memory by repeated thinking are cogent proofs of the existence of the electrical form of short-term memory. The function of this memory is to retain information about previous states of the brain for temporary use. The chemical short-term memory, whose existence I shall substantiate in further paragraphs, has a different function of providing a basis for consolidation of long-term memory. Because of their different functions and uncertain mutual relation the electrical and chemical forms of short-term memory should be called by different names. At the moment I will use the term temporary memory for the former and short-term memory for the latter. This would reflect the fact that temporary memory may not necessarily lead to consolidation of lasting memory traces while short-term memory is a definite step towards long-term memory. The separation of the two concepts does not exclude the possibility that temporary memory is inseparably related to short-term memory (in which case using only the term short-term memory would again be justified). Regarding the Papez circuits, I believe that they might be storing reverberating impulsation whose significance would not be based on inducement of long-term memory. Such impulsation might be responsible for storing some general information about the state of the brain that constantly modifies action of other circuits. This might include the general emotional state of the individual. Papez circuits could also be a basis of the timing circuitry used in the process of stochastic learning (see further).

There are many models of molecular mechanisms involved in formation of chemical memory, but one of them is particularly instructive. In 1977, Gibbs and colleagues proposed a three-stage model of memory. As a consequence of smartly designed experiments investigating the action of memory-abolishing factors, an intermediary stage of memory formation was proposed. Gibbs’ model included the following stages:

- short-term memory (10-15 min.) based on changes in membrane permeability for potassium

- intermediate memory (30 min.) based on changes in the activity of Na/K-ATP-ase

- long-term memory based on the synthesis of proteins

Existence of the aforementioned stages was concluded from the influence of factors affecting potassium concentration, Na/K-ATP-ase and protein synthesis. It was found that all these factors yielded abolition of memory at different times after learning, thus providing the ground for the model. I believe that if a wider range of inhibitors affecting metabolism of a neuron were studied and more precise measurements were available, we could distinguish more than three stages of memory formation. This leads to the conclusion that formation of memory may be considered as a chain of molecular phenomena that affect conductive properties of synapses. This, in consequence, implies the artificiality of the division of memory into short- and long-term components. Other stages of memory formation might be abolished by various chemical agents considered jointly as short-term memory inhibitors. Finally, I should mention the importance of another factor in memory formation: sleep. It is a well-established belief that the REM phase of sleep is necessary for the formation of long-term memories. Deprivation of this phase slows down learning significantly [Empson, 1970]. The question remains: why is a special physiological state necessary for the consolidation of memory? Could not short-term memory traces be just converted to more permanent ones during normal, daily activity of the brain? The importance of sleep for intellectual activity is indisputable. In the course of evolution, sleep appears as an accompaniment to development of more sophisticated behaviors which in turn are related to expansion of learning capabilities. A fascinating candidate for solving the conundrum is Evans’s theory that sleep is a sort of garbage-collection procedure. I do not know the argument which led Evans to his theory, but when considering it with reference to studies of sleep in cats, a striking correspondence arises. 
During the REM phase, the brain stem is believed to send random impulses to the cortex which processes them as if they were normal signals coming from the world of senses. This results in dreams [Hubbard, 1975]. Cortical response coming back down to effectors is cut off in the brain stem so that a dreamer does not struggle with a dreamt-of opponent, perhaps injuring himself. This results in a well-known feeling of being mired in molasses, because efferent impulsation is not accompanied by sensory feedback. The random impulsation from the brain stem might be used in the process of garbage-collection proposed by Evans. We can imagine that this sleep impulsation is used to discover those synaptic connections which are not coherently placed in the structure of nervous circuits (e.g. being inaccessible). These incoherent connections would undergo erasure in order to reduce unnecessary noise in the system. Thus sleep would be the second element, apart from forgetting, used to optimize the fabric of the brain circuitry.

Interim summary

- Memories are not specifically located in the brain although some particular structures may be specialized to store visual, acoustic, olfactory and other memories

- I use the term temporary memory to exclude the electric phenomena from short-term memory. Short-term memory will be later on considered as having a chemical nature

- Formation of memory is a sequence of multiple molecular phenomena which are roughly divided into short- and long-term memory

- Sleep plays an important role in memory formation, perhaps as a process optimizing the connections of the circuitry

10.2. Stochastic and deterministic learning

In order to develop a molecular model of memory consistent with the SuperMemo theory I have to draw a distinction between the two basic forms of learning: stochastic and deterministic learning. The main motives to do so are:

- Different structure of neural circuitry and above all different learning algorithms affect the applicability of the SuperMemo theory

- Different synaptic configuration involved can entail different molecular mechanisms

The basic difference between stochastic and deterministic learning is that in the first case, the nervous system has to work out the response to stimuli by trial and error while in the second it is provided with a ready pattern of the desired reaction. Both mechanisms are used jointly in many learning tasks, so telling them apart in particular cases may not be obvious. An example of a quite pure form of a stochastic process is the later stages of learning to play a short phrase on a musical instrument (later stages are understood here as those that start at the moment when conscious control of motor units is gradually given up). All forms of creative thinking are strongly based on stochastic processes. An example of a deterministic process is learning a list of syllables or learning answers to a set of questions. For a digital illustration of stochastic and deterministic learning and analysis of the computational complexity of the related algorithms see [Wozniak, 1988a].

10.2.1. Hypothetical model of stochastic learning

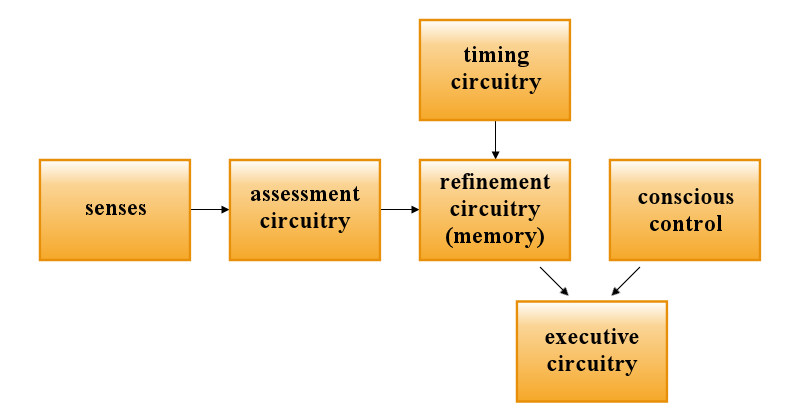

Let us consider learning to play guitar as a ground to analyze possible neuronal circuitry and mechanisms of synaptic facilitation involved in stochastic learning. Imagine a guitarist who wants to acquire the skill of playing a single motif (a few bars of music) with maximum possible proficiency. He will probably start by reading the score and following it with fingers plucking the strings. After repeating the motif a few times he will stop looking at the score since it will have been memorized by then by means of deterministic learning. He will fix his attention on the conscious control of muscles of his arm in order to improve the performance. This conscious guidance provides ready solutions to the nervous circuitry and as such must again be considered as deterministic learning. He might, for instance, change the orientation of the wrist against the neck of the guitar, etc. After a certain number of repetitions his consciousness will not have any further role in improving the performance, i.e. deterministic instruction of fingers has been completed and the stochastic stage begins. He might start reading a newspaper while his fingers would continue their action repeatedly. After an hour of reading he would find a significant improvement of the performance. Certainly, this improvement had to be worked out by trial and error by the nervous system itself, because there are no other valid sources of information on how the muscles should work except for the punishing or rewarding feedback from those nervous centers that are responsible for subconscious analysis of the quality of the training. For example, a slip of a finger might generate a punishing impulse whose effect would be to give up erroneous trials. A hypothetical model of how the process could proceed on the neuronal level is presented below. 
It is obvious that the neuronal circuitry responsible for the stochastic learning (let us call it the refinement circuitry) must have an interface to the same combination of motor neurons that are used in the deterministic stage of learning (the executive circuitry). On the other hand, there is a need for circular reverberating impulses that would provide timing for the whole process. This timing would be free of conscious control (except for such commands as Faster, Slower, On and Off).

The circuitry providing the timing will be called the timing circuitry. The refinement circuitry would have to be fed with information from the centers analyzing quality of the performance (the assessment circuitry). No doubt, all the aforementioned components are necessary in the stochastic learning system, but their internal architecture as well as anatomical location can be stated only in hypothetical terms.

Fig. 10.1. The block diagram of the neural components involved in stochastic learning.

Figure 10.2 presents an exemplary organization of the refinement circuitry. It is included exclusively to illustrate the process and to allow basic conclusions to be drawn that will be used in further considerations. To make quantitative consideration easier, a specific number of input and output tracts has been assumed. The timing circuitry sends its signal from 100 neurons, the executive circuitry is supposed to be supervised by 1000 neurons while the assessment circuitry can provide between 1 and 100,000 various input signals.

Fig. 10.2. Exemplary organization of the refinement circuitry participating in the process of stochastic learning.

In the model presented in Fig. 10.2, no circuitry responsible for stochastic selection of possible control signals is involved. In reality it does not have to be so, but the assumed solution seems to be particularly attractive because the random selection of control paths is accounted for by the properties of the synapses themselves. In the model, it is assumed that an axon can provide no more than ten connections and a neuron can accept no more than ten input signals. These assumptions make considerations easier, but as will be shown later, the total number of neurons involved in the system becomes much larger than necessary (an average neuron in the central nervous system accepts input connections from about one thousand other neurons [Wittig, 1984]). There are six layers of neurons between the timing and executive circuitry:

- 100 timing neurons

- 10*100 = 1,000 neurons of the first layer of the refinement circuitry (each of timing neurons can activate 10 neurons of this layer)

- 10*1000 = 10,000 neurons of the second layer provided by inputs from the previous layer

- 10*10,000 = 100,000 neurons directly responsible for association of executive and timing neurons. These neurons form the addressing matrix of the refinement circuitry

- 100,000/10 = 10,000 neurons of the third refinement layer. Each of them can be activated by one of the ten neurons from the previous layer

- 10,000/10 = 1,000 neurons of the executive circuitry. Each of them can be activated by one of the ten neurons from the last refinement layer
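The layer sizes listed above follow mechanically from the three assumed numbers (100 timing neurons, 1,000 executive neurons, a branching limit of ten). The following is only an illustrative sketch of that arithmetic; the function name and parameter names are hypothetical and do not come from the thesis.

```python
def refinement_layer_sizes(timing=100, executive=1000, fan=10):
    """Return the sizes of the layers from the timing to the executive circuitry.

    Assumes, as in the text, that every axon branches to at most `fan` neurons
    and every neuron accepts at most `fan` inputs.
    """
    sizes = [timing]
    matrix = timing * executive  # one addressing neuron per timing-executive pair
    # Diverging layers: multiply by the fan-out until the addressing matrix is reached.
    while sizes[-1] < matrix:
        sizes.append(sizes[-1] * fan)
    # Converging layers: divide by the fan-in until the executive layer is reached.
    while sizes[-1] > executive:
        sizes.append(sizes[-1] // fan)
    return sizes


layers = refinement_layer_sizes()
print(layers)       # [100, 1000, 10000, 100000, 10000, 1000]
print(sum(layers))  # 122100 neurons in total
```

With a larger branching limit the intermediate layers shrink or vanish, which is the simplification the text alludes to when it mentions neurons accepting about one thousand inputs.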

The synapses responsible for changes in the system are all located in the addressing matrix (layer 4). Each of these synapses is in effect a direct connection between a single timing neuron and a single executive neuron. Thus by modifying the state of these synapses, the system is able to encode all possible relations between the timing and executive circuitries.

The system works in the following way:

- Conscious control drives the executive system into action

- Timing circuitry begins to send impulsations (sequentially all of its neurons fire one by one in specified intervals)

- Stochastically, the synapses of the addressing matrix (addressing synapses) reach the level of activation and activate random neurons in the executive circuitry

- If the random impulsations cause detriment to the performance, the assessment circuitry suppresses detrimental changes in the addressing matrix, otherwise they become more and more permanent

- When the level of performance of the refinement circuitry reaches sufficient quality, the conscious supervision is taken off
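The steps above can be caricatured as a trial-and-error loop over a small addressing matrix. This is a minimal sketch under strong simplifying assumptions that are not part of the original model: the assessment circuitry is replaced by a hypothetical `target` list naming the executive neuron that should fire at each timing step, facilitation changes in fixed increments of 0.1, and spontaneous drift and forgetting are ignored.

```python
import random


def train_addressing_matrix(target, n_executive, trials=2000, seed=0):
    """Tune a timing-by-executive matrix of synapses by reward and punishment.

    target[t] is the executive neuron that the (hypothetical) assessment
    circuitry rewards at timing step t.
    """
    rng = random.Random(seed)
    n_timing = len(target)
    # Facilitation level of each addressing synapse; 0.0 means non-addressing.
    w = [[0.0] * n_executive for _ in range(n_timing)]
    for _ in range(trials):
        t = rng.randrange(n_timing)     # timing neuron that is currently firing
        e = rng.randrange(n_executive)  # synapse that happens to activate
        if e == target[t]:
            w[t][e] = min(1.0, w[t][e] + 0.1)  # rewarding impulse train
        else:
            w[t][e] = max(0.0, w[t][e] - 0.1)  # punitive impulse train
    return w


def readout(w):
    """For each timing neuron, return the executive neuron with the strongest synapse."""
    return [max(range(len(row)), key=row.__getitem__) for row in w]


target = [2, 0, 3, 1]  # illustrative "correct" motor sequence
w = train_addressing_matrix(target, n_executive=4)
print(readout(w) == target)
```

No component of the loop is ever told which synapse is correct in advance; the mapping emerges only from rewarded and punished random trials, which is the defining property of stochastic learning in the text.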

Fig. 10.3. The exemplary processes occurring in the addressing synapses during stochastic learning.

As shown in Fig. 10.3, the addressing synapses reveal a tendency to spontaneously change their facilitation levels, but in the case of stimulation from the timing circuitry, these changes reach the steady state above the limit necessary for activation of the postsynaptic membranes, while in the case of no stimulation, the steady state is just below the activation level. Thus in the period of lack of timing stimulation, the addressing matrix is slowly being erased to a nonactive (nonaddressing) state (forgetting), while upon timing impulsation, the synapses slowly reach the activation level and the neurons of the 5th layer start firing. However, punitive impulse trains from the assessment circuitry will cause significant suppression of the strength of addressing synapses, while the rewarding trains will strengthen the changes further. Note that with the decreasing percentage of wrong firing the average facilitation of synapses will increase. If a single stochastic firing in the addressing matrix occurs once a second, then the process of examining the whole matrix would take about 30 hours. The number of neurons in the addressing matrix is here equal to the product of the numbers of neurons in the timing and executive circuitries. However, it is easy to conceive that the addressing matrix is not a complete one. In fact it requires only as many synapses as there are associations required between timing and executive impulses. Obviously, a more sophisticated circuitry would then be required. Moreover, if we admit that a neuron can accept 1000 synaptic connections the whole network could be enormously simplified. Note that the conscious control does not affect the addressing matrix. It only provides a basis for the process to start. One could conceive a more elegant model in which the conscious impulses would prepare a rough setting of the addressing matrix thus significantly decreasing time overheads for stochastic learning. 
Such a model, however, would entail substantial complication of the circuitry (how to pass information back from the executive circuitry to the addressing matrix?). A very interesting conclusion can be drawn concerning the probable synaptic configurations involved in the process of stochastic learning. There are two basic forms of synaptic facilitation: presynaptic and heterosynaptic facilitation (see Fig. 10.4).
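The estimate of about 30 hours for examining the whole addressing matrix can be checked with a few lines of arithmetic. The sketch below assumes a complete matrix of 100 × 1,000 synapses, each examined exactly once; the function and parameter names are illustrative only.

```python
def scan_time_hours(timing=100, executive=1000, firings_per_second=1.0):
    """Hours needed to try every synapse of a complete addressing matrix once."""
    synapses = timing * executive  # 100,000 in the example
    return synapses / firings_per_second / 3600


print(round(scan_time_hours(), 1))  # 27.8
```

The result, roughly 28 hours, agrees with the rounded figure in the text; an incomplete matrix or a higher firing rate shortens the time proportionally.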

Fig. 10.4. Configurations involved in presynaptic and heterosynaptic facilitation.

I hope that the presented example of the circuitry involved in stochastic learning makes it possible to see that no matter how we redraw our hypothetical neural network, we will always end up with a situation in which impulses descending from the timing and assessment circuitry meet and result in facilitation (or suppression as in the example). Heterosynaptic facilitation requires that the modified synapse receive a signal from the modifying one via a depolarization wave of the postsynaptic membrane. This would result in noise in the executive circuitry each time a rewarding or punitive impulse is sent. The presynaptic configuration allows for direct modification of the timing synapse by the axoaxonic connection. This would strongly suggest involvement of presynaptic facilitation in stochastic learning.

10.2.2. Hypothetical model of deterministic learning

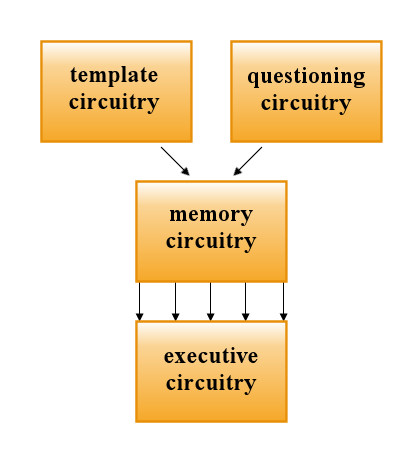

To make my considerations easier let us imagine a student who speaks English and wants to memorize vocabulary of Esperanto. He will be shown an English word and his task will be to learn the Esperanto equivalent. In this case four neural components will be required:

- questioning circuitry will generate impulses that encode the pattern recognized as a question (e.g. impulses resulting from recognition of an English word “river”)

- template circuitry will generate executive impulsation encoding the correct response to the question (e.g. impulsation resulting in pronouncing an Esperanto word “rivero”)

- memory circuitry will be able to associate template impulsation with the questioning impulsation in the very same way as unconditioned and conditioned stimuli are associated in the process of conditioning

- executive circuitry will be able to execute commands coming from the template circuitry, and later also from the questioning circuitry

Fig. 10.5. The block diagram of the neural components involved in deterministic learning.

Again to establish a clear-cut reference, we will assume that both the questioning and template circuitries provide impulses from 1,000 neurons each. Note that it is theoretically possible that each of these neurons can be responsible for generation of impulses coding for a single word only, thus the system presented below allows for as many as 1,000 words to be memorized.

Note that the model presented below implies that the whole cerebral cortex is able to associate between no more than 100,000 stimuli (equivalent of a lexical competence of an average speaker). However, optimization of circuitry and increase of branching could increase this capacity manifold.

Fig. 10.6. Exemplary organization of the neural network involved in deterministic learning.

In the model presented in Fig. 10.6, there are seven layers of neurons:

- 1 Layer of template and questioning circuitry – 2*1,000 neurons

- 2-3 Two layers that expand connections of the first layer before forming the addressing matrix (20,000 and 200,000 neurons)

- 4 The addressing matrix of neurons that receive direct impulses from a specified pair of neurons (one of them belongs to the questioning, the other to the template circuitry). The addressing matrix comprises 1,000*1,000 = 1,000,000 neurons

- 5-6 Two layers that focus connections of the addressing matrix before forming the executive circuitry (100,000 and 10,000 neurons)

- 7 Input layer of the executive circuitry (1,000 neurons)
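As in the stochastic case, the seven layer sizes follow from the assumed numbers. The sketch below is illustrative only (names are hypothetical); it assumes 1,000 neurons in each of the questioning and template circuitries, a branching limit of ten, and an addressing matrix with one neuron per questioning-template pair.

```python
def deterministic_layer_sizes(words=1000, fan=10):
    """Return the sizes of the seven layers of the deterministic network."""
    sizes = [2 * words]            # layer 1: questioning + template neurons
    sizes.append(sizes[-1] * fan)  # layer 2: first expansion
    sizes.append(sizes[-1] * fan)  # layer 3: second expansion
    sizes.append(words * words)    # layer 4: one neuron per question-template pair
    while sizes[-1] > words:       # layers 5-7: focus down to the executive input
        sizes.append(sizes[-1] // fan)
    return sizes


layers = deterministic_layer_sizes()
print(layers)       # [2000, 20000, 200000, 1000000, 100000, 10000, 1000]
print(sum(layers))  # 1333000 neurons in total
```

Layer 3 supplies 200,000 × 10 = 2,000,000 connections, which matches the two inputs (one questioning, one template) received by each of the 1,000,000 addressing neurons.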

In the process of learning, presentation of a question and answer causes the impulsation from the corresponding neurons in the questioning and the template layers to come down the system until they meet in one of the million neurons in the addressing layer. This coincidence will cause facilitation in the synapse connecting the questioning circuitry with the neuron in the addressing matrix so that later, activation of the questioning circuitry alone will produce the correct response.
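The learning step described above can be reduced to a toy model in which a single paired presentation is enough to facilitate the questioning synapse. This is only a sketch: the class and method names are hypothetical, the expanding and focusing layers are omitted, and facilitation is treated as all-or-nothing with no forgetting.

```python
class DeterministicMemory:
    """Toy addressing matrix: one neuron per (question, answer) pair."""

    def __init__(self):
        # Addressing neurons whose questioning synapse has been facilitated.
        self.facilitated = set()

    def learn(self, question, answer):
        # Questioning and template impulses meet in one addressing neuron,
        # which facilitates the questioning synapse on that neuron.
        self.facilitated.add((question, answer))
        # The template impulse alone already drives the executive circuitry.
        return answer

    def recall(self, question):
        # Only facilitated synapses let the question alone reach the executive circuitry.
        return [a for (q, a) in sorted(self.facilitated) if q == question]


memory = DeterministicMemory()
print(memory.recall("river"))   # []
memory.learn("river", "rivero")
print(memory.recall("river"))   # ['rivero']
```

Unlike the stochastic sketch, nothing here is found by trial and error: the correct response is supplied ready-made by the template circuitry, and learning only records the pairing.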

Each of the neurons in the addressing matrix receives impulses from only one pair of questioning-template neurons and addresses only one neuron in the executive circuitry. As in the stochastic case, optimization of wiring and increased branching of the network would decrease the number of neurons required manifold (e.g. 1,000 dendrites per neuron would allow for the total elimination of the network in the example). Let us now consider the possible synaptic configurations involved in deterministic learning. If we consider the fact that the template axon alone should be able to induce depolarization of the executive neuron then we directly come to prefer the heterosynaptic solution. The template axon would play a role in facilitating the questioning synapse only once – at the moment of first association of the template and questioning impulses. This association would be sufficient to induce synaptic changes allowing depolarization by the questioning neuron alone. In this case, the mediation of the postsynaptic membrane in the signal transmission between the synapses does not induce noise because at any rate the template neuron would cause depolarization of the executive one. Thus the argument that led to the selection of the presynaptic model for stochastic learning does not hold. The distinction between stochastic and deterministic learning is not a purely theoretical one. It was observed that patients with anterograde amnesia can, to a certain degree, learn various motor skills, although they are unable to recall the fact of learning. This led Squire (1982) to propose the existence of two distinct sorts of knowledge: procedural and declarative [Butters, 1984]. It is possible that the two kinds of knowledge distinguished by Squire are acquired by means of stochastic and deterministic learning respectively. 
Thus amnesic patients can master procedural (stochastic) skills in spite of being unable (because of the damage to the temporal cortex) to form new declarative (deterministic) memories. Hubbard [1975] mentions the fact that motor skills (note that most of them basically require stochastic learning) are much better remembered than verbal ones (deterministic). This would agree with my observation that a SuperMemo schedule for stochastic learning is possibly a less dense one (see Chapter 9). Finally, it should be noticed that stochastic and deterministic learning are not the only forms of learning in existence. Such phenomena as habituation and sensitization were shown to have a different nature in selected cases. For example, in Aplysia californica, a specialized circuit exists to that end. Stimulation of a given neuron causes sensitization of another one without active participation of the latter. This, however, requires a neural network whose structure is strictly determined by genetic factors and therefore seems to be of lesser importance in humans (perhaps except for more primitive conditioning involving phylogenetically older circuits). In the following part of the chapter I will focus the attention on deterministic learning which was the subject of most of my experiments on human memory. Nevertheless, some of the presented concepts and conclusions may refer to stochastic learning as well.

Interim summary

- A distinction between stochastic and deterministic learning was proposed. Stochastic learning refers primarily to acquisition of motor skills while deterministic learning is used in most forms of textbook education

- Heterosynaptic facilitation was shown as more likely involved in deterministic learning. Similarly presynaptic facilitation was proposed to be associated with stochastic learning

- Further analysis of phenomena related to memory will be restricted to deterministic learning